On q-steepest descent method for unconstrained multiobjective optimization problems

By a mysterious writer

Last updated 10 November 2024

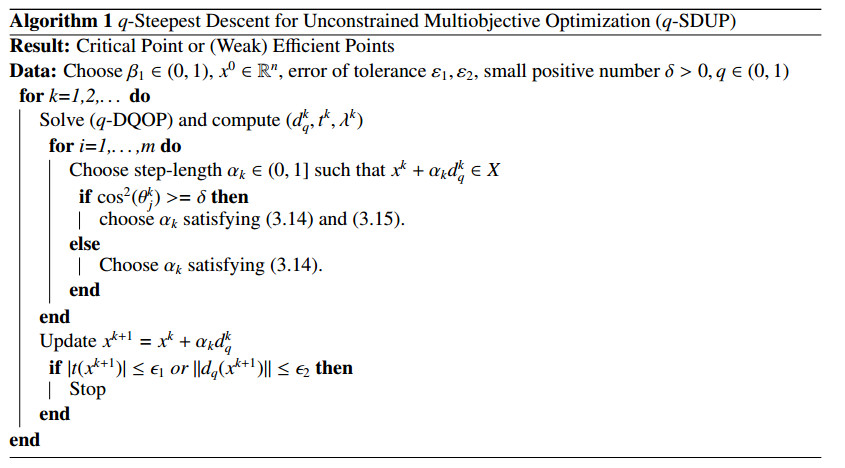

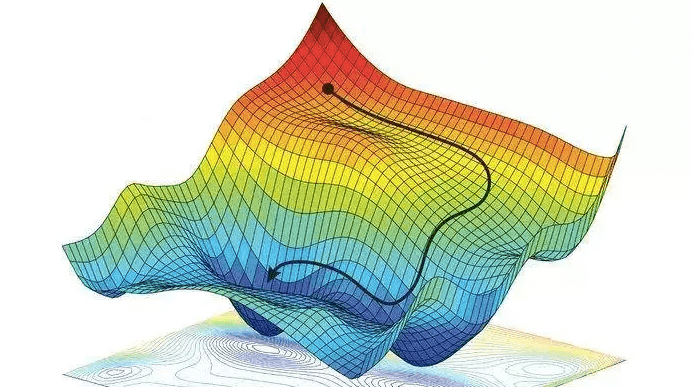

The <i>q</i>-gradient is a generalization of the gradient based on the <i>q</i>-derivative. A <i>q</i>-version of the steepest descent method for unconstrained multiobjective optimization problems is constructed; it reduces to the classical method when <i>q</i> equals 1. In this method, the search moves step by step from a global search at the beginning to a local search within a particular neighborhood at the end. The method does not depend on the starting point. The proposed algorithm for finding critical points is verified on numerical examples.
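To make the idea of a <i>q</i>-gradient concrete, here is a minimal Python sketch. It assumes the standard Jackson definition of the <i>q</i>-derivative, D_q f(x) = (f(qx) - f(x)) / ((q - 1)x), applied one coordinate at a time. The function names `q_partial` and `q_gradient`, the step `h`, and the central-difference fallback used when <i>q</i> = 1 or a coordinate is zero are illustrative assumptions, not details taken from the paper.

```python
from typing import Callable, List, Sequence


def q_partial(f: Callable[[Sequence[float]], float],
              x: Sequence[float], i: int, q: float, h: float = 1e-6) -> float:
    """First-order partial q-derivative of f with respect to x[i] (hypothetical helper)."""
    xi = x[i]
    if q == 1.0 or xi == 0.0:
        # Assumption: where the q-difference quotient is undefined, approximate
        # the classical partial derivative with a central difference.
        up = list(x)
        up[i] = xi + h
        down = list(x)
        down[i] = xi - h
        return (f(up) - f(down)) / (2.0 * h)
    scaled = list(x)
    scaled[i] = q * xi  # dilate only the i-th coordinate by q
    return (f(scaled) - f(x)) / ((q - 1.0) * xi)


def q_gradient(f: Callable[[Sequence[float]], float],
               x: Sequence[float], q: float, h: float = 1e-6) -> List[float]:
    """Vector of partial q-derivatives of f at x (hypothetical helper)."""
    return [q_partial(f, x, i, q, h) for i in range(len(x))]


def example_objective(v: Sequence[float]) -> float:
    # Toy objective chosen for illustration: f(x1, x2) = x1^2 + 3*x2
    return v[0] ** 2 + 3.0 * v[1]


if __name__ == "__main__":
    print(q_gradient(example_objective, [2.0, 1.0], q=0.5))
    print(q_gradient(example_objective, [2.0, 1.0], q=1.0))
```

For this toy objective at the point (2, 1), the <i>q</i>-gradient with <i>q</i> = 0.5 is (3, 3), while <i>q</i> = 1 gives approximately the classical gradient (4, 3). A value of <i>q</i> far from 1 samples the function over a wider span, which is one way to read the "global at first, local at the end" behavior described above as <i>q</i> approaches 1.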

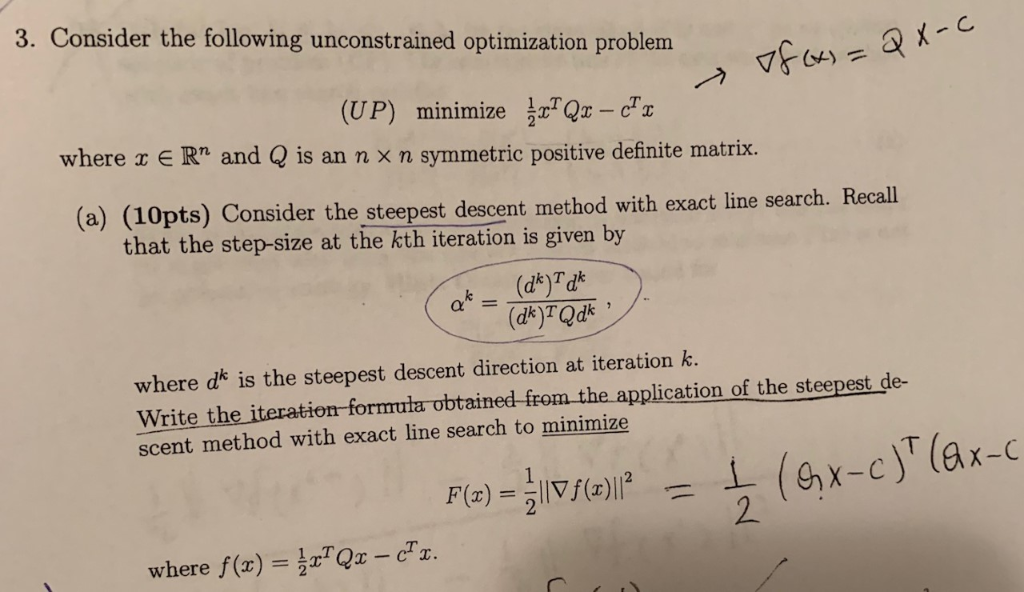

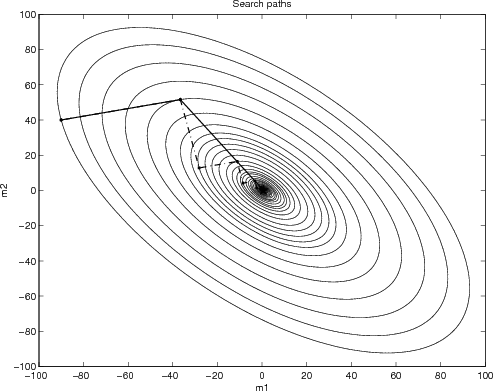

3. Consider the following unconstrained optimization

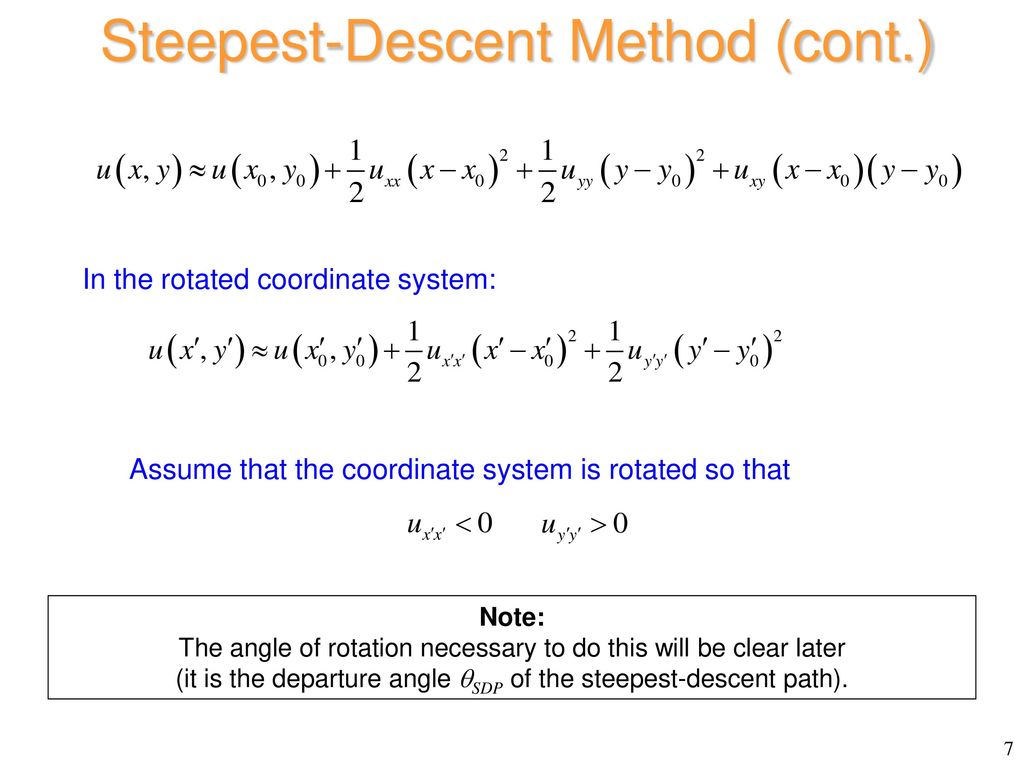

Chapter 4 Line Search Descent Methods Introduction to Mathematical Optimization

On the Complexity of Steepest Descent, Newton's and Regularized Newton's Methods for Nonconvex Unconstrained Optimization Problems

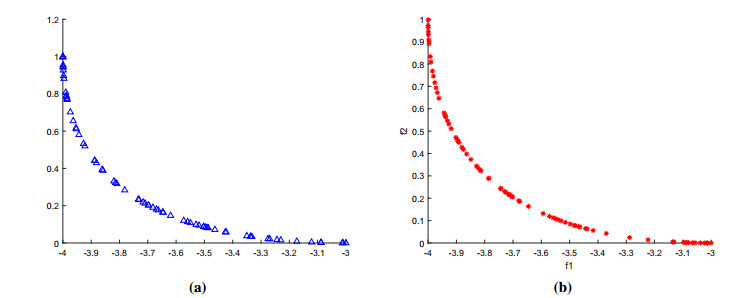

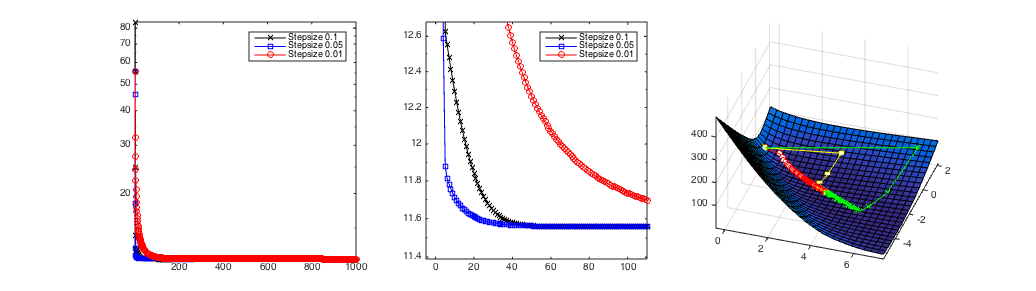

Performance of q-Steepest Descent Method.

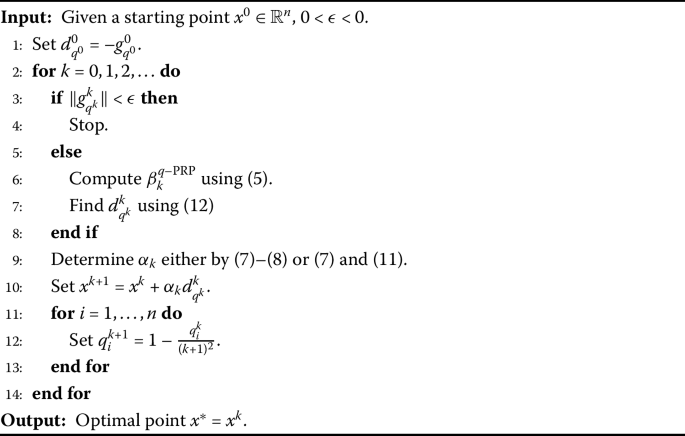

A q-Polak–Ribière–Polyak conjugate gradient algorithm for unconstrained optimization problems, Journal of Inequalities and Applications


What Is Optimization Toolbox?

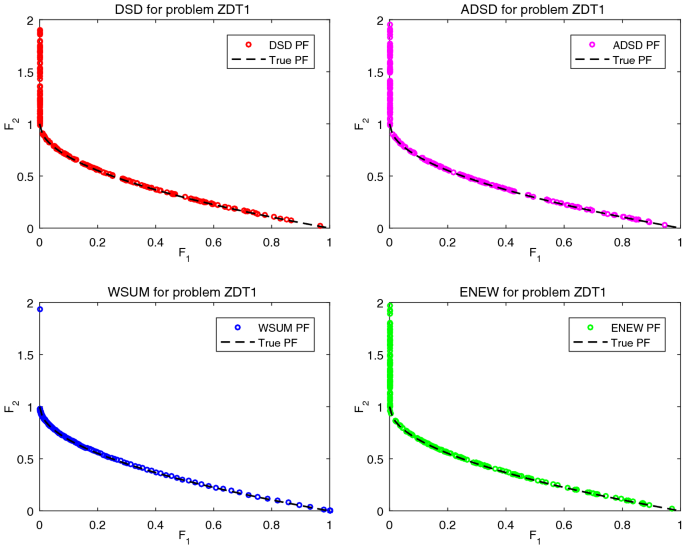

Accelerated Diagonal Steepest Descent Method for Unconstrained Multiobjective Optimization

20 Best Optimization Books of All Time - BookAuthority
