Fractal Fract, Free Full-Text

By a mysterious writer

Last updated 10 November 2024

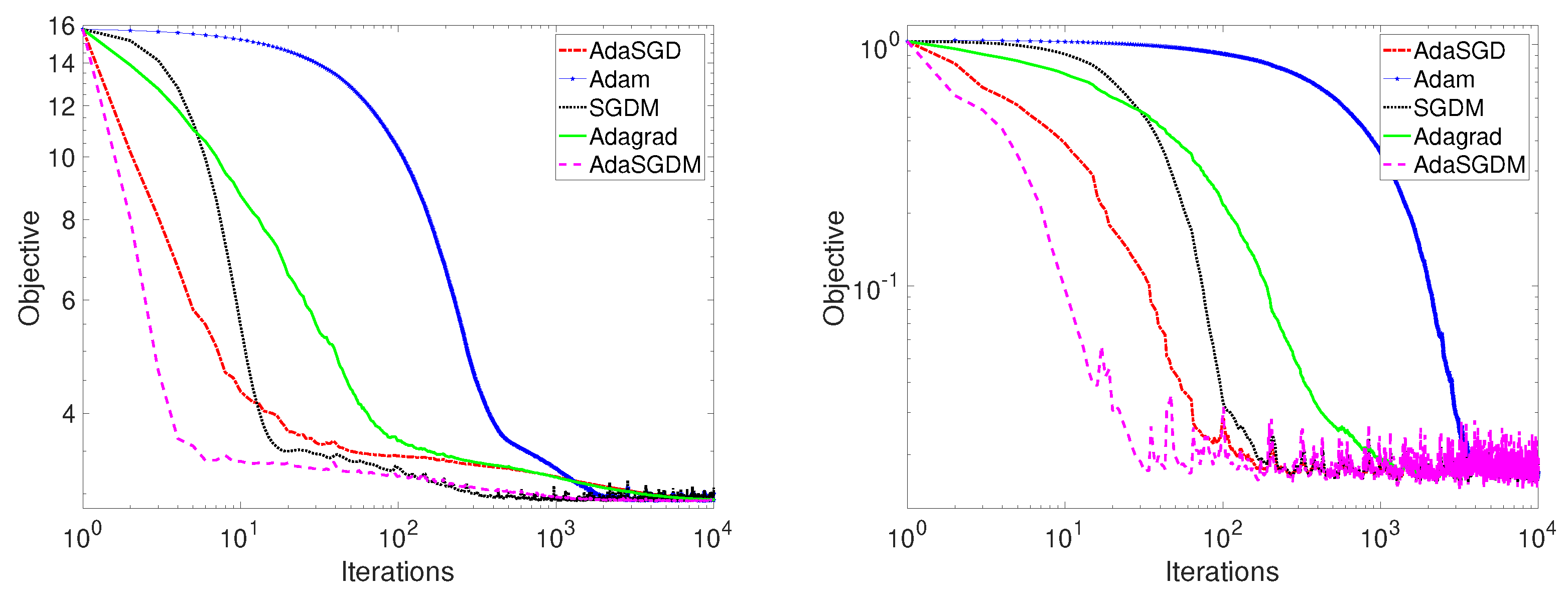

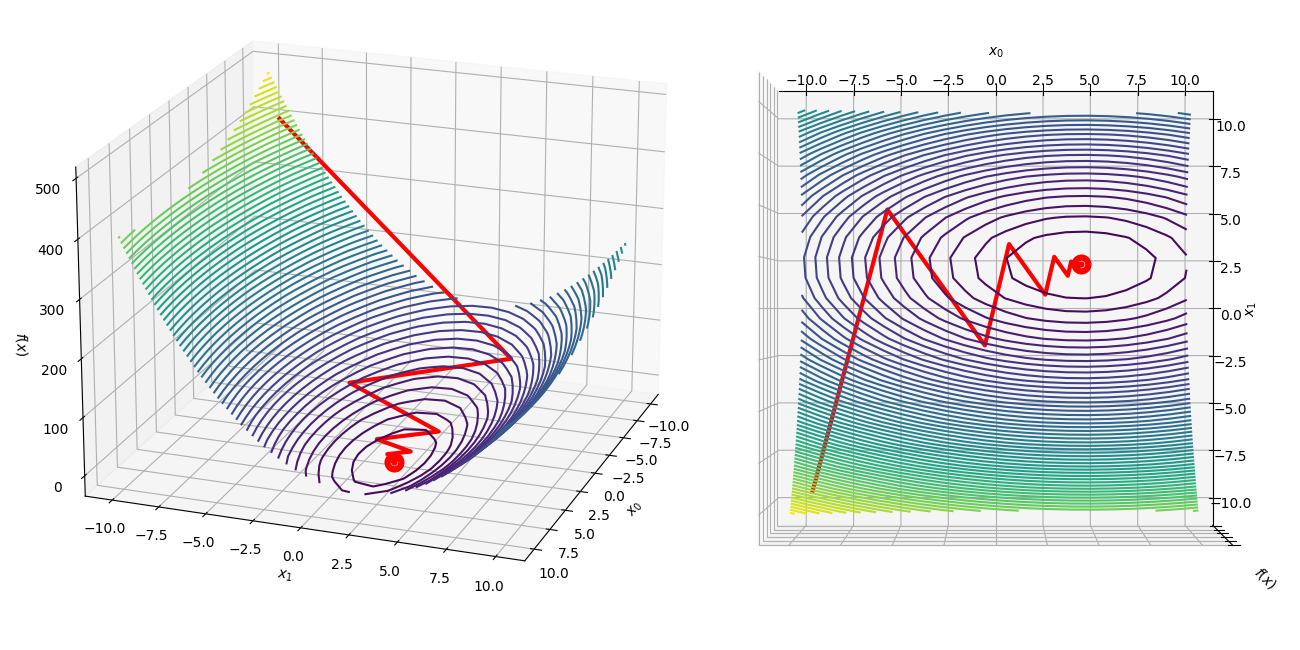

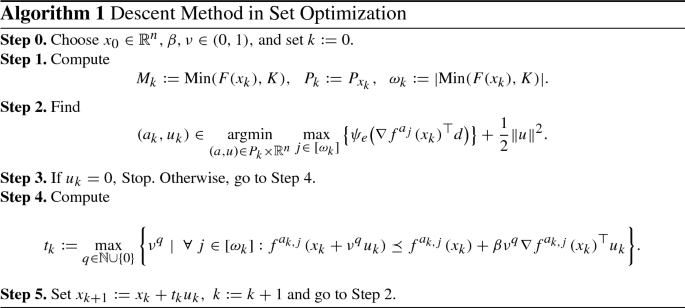

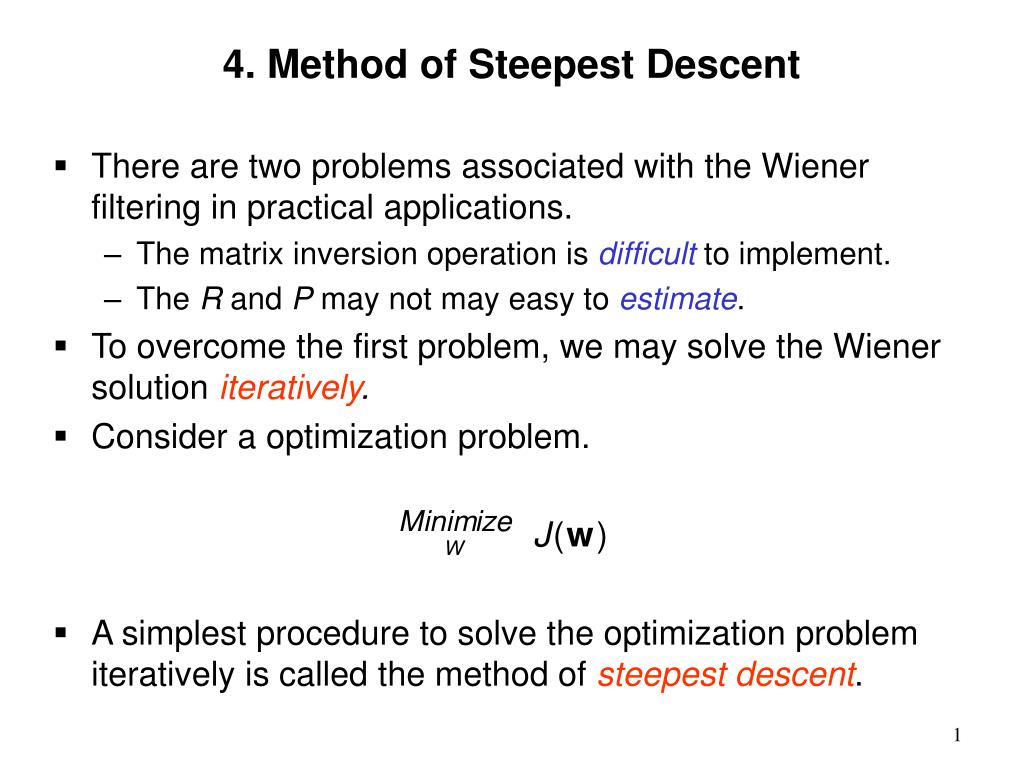

Stochastic gradient descent is the method of choice for solving large-scale optimization problems in machine learning. However, effectively selecting the step sizes in stochastic gradient descent methods is challenging, and this choice can greatly influence the performance of stochastic gradient descent algorithms. In this paper, we propose a class of faster adaptive gradient descent methods, named AdaSGD, for solving both convex and non-convex optimization problems. The novelty of this method is a new adaptive step size that depends on the expectation of the past stochastic gradients and their second moment, which makes the method efficient and scalable for big data and high parameter dimensions. We show theoretically that the proposed AdaSGD algorithm has a convergence rate of O(1/T) in both convex and non-convex settings, where T is the maximum number of iterations. In addition, we extend AdaSGD to the momentum case and obtain the same convergence rate for AdaSGD with momentum. To illustrate our theoretical results, we conduct several numerical experiments on problems arising in machine learning, which verify the promise of the proposed method.
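The abstract does not state the AdaSGD step-size formula, so the following is only a minimal sketch of the general idea: a per-coordinate step size built from running estimates of the mean of past stochastic gradients and of their second moment. As an assumption, it swaps in the well-known Adam-style update (`beta1`, `beta2`, bias correction); it is not the paper's rule. The names `adaptive_sgd`, `noisy_quadratic_grad` and `TARGET` are hypothetical, and the noisy quadratic is a toy problem chosen for illustration.

```python
import math
import random


def adaptive_sgd(grad_fn, x0, n_iters=2000, lr=0.01,
                 beta1=0.9, beta2=0.999, eps=1e-8, seed=0):
    """Minimize f from stochastic gradients with a per-coordinate adaptive step.

    ASSUMPTION: Adam-style rule (running estimates of E[g] and E[g^2]);
    this is a stand-in, not the AdaSGD step-size rule from the paper.
    """
    rng = random.Random(seed)
    x = list(x0)
    m = [0.0] * len(x)  # running estimate of the mean gradient E[g]
    v = [0.0] * len(x)  # running estimate of the second moment E[g^2]
    for t in range(1, n_iters + 1):
        g = grad_fn(x, rng)  # one noisy gradient sample at the current point
        for i in range(len(x)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            # Bias correction compensates for initializing m and v at zero.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            # Effective step lr / sqrt(v_hat) shrinks where gradients are large or noisy.
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Hypothetical test problem: f(x) = 0.5 * ||x - TARGET||^2 with Gaussian gradient noise.
TARGET = [3.0, -2.0]


def noisy_quadratic_grad(x, rng):
    # Exact gradient (x - TARGET) plus zero-mean noise with standard deviation 0.1.
    return [xi - ti + rng.gauss(0.0, 0.1) for xi, ti in zip(x, TARGET)]


x_final = adaptive_sgd(noisy_quadratic_grad, [0.0, 0.0])
```

Because the second-moment estimate grows where gradients are large or noisy, the effective step size adapts per coordinate without hand tuning, which is the step-size selection problem the abstract describes. The first-moment buffer `m` already behaves like a momentum term; the paper's momentum variant of AdaSGD may be formulated differently.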

