8 Advanced parallelization - Deep Learning with JAX

By a mysterious writer

Last updated 15 November 2024

Using easy-to-revise parallelism with xmap() · Compiling and automatically partitioning functions with pjit() · Using tensor sharding to achieve parallelization with XLA · Running code in multi-host configurations

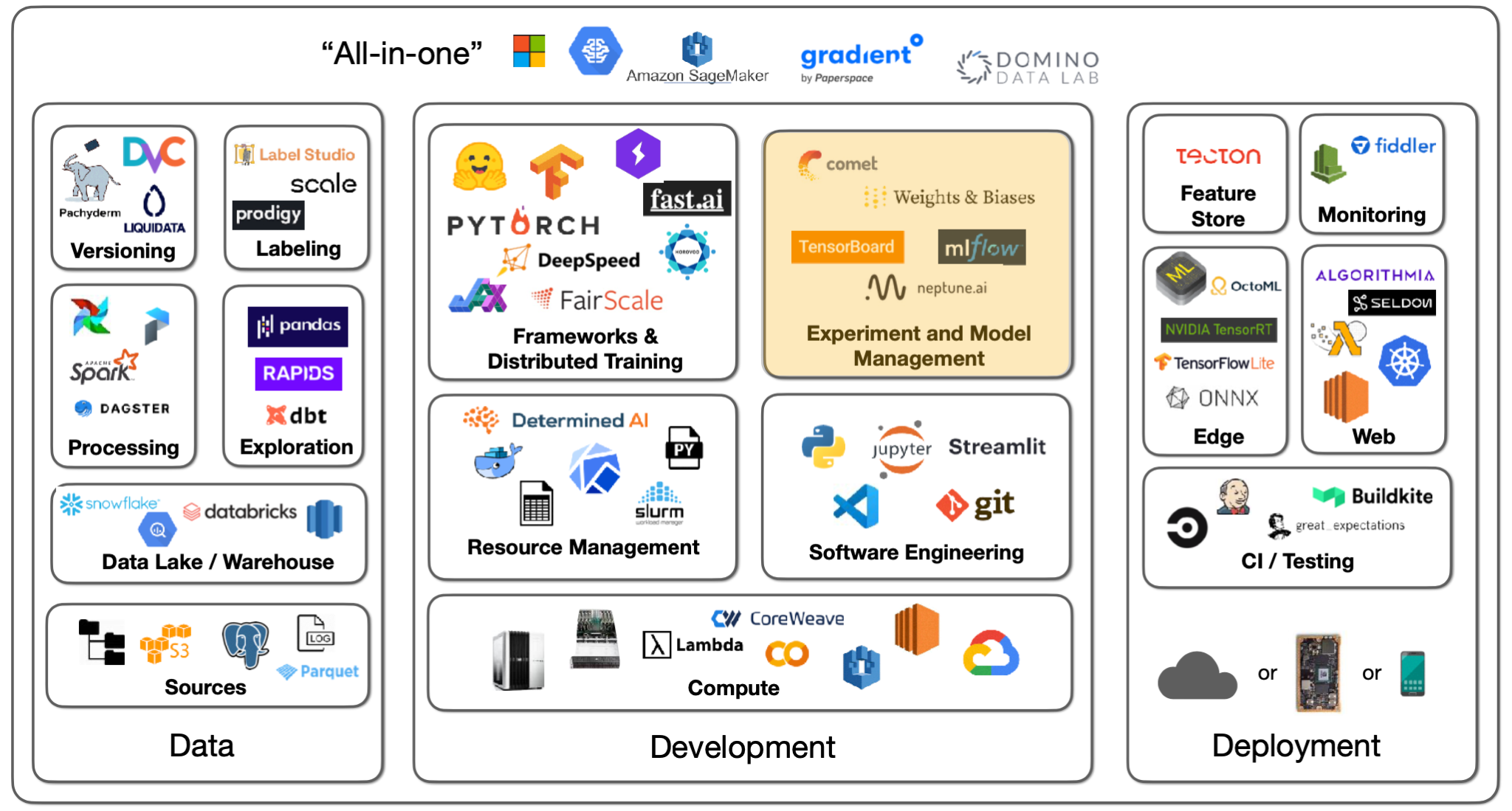

Lecture 2: Development Infrastructure & Tooling - The Full Stack

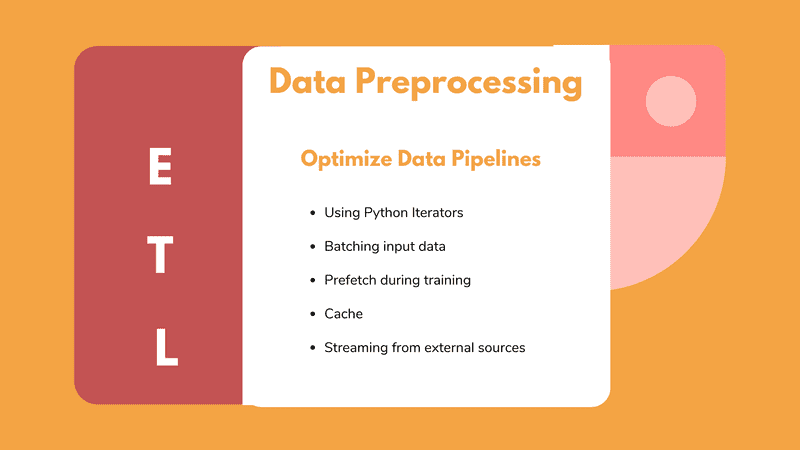

Data preprocessing for deep learning: Tips and tricks to optimize

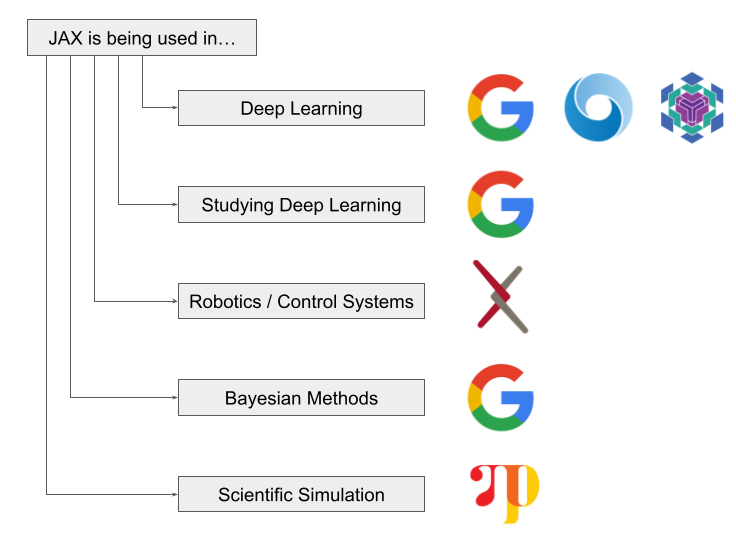

Why You Should (or Shouldn't) be Using Google's JAX in 2023

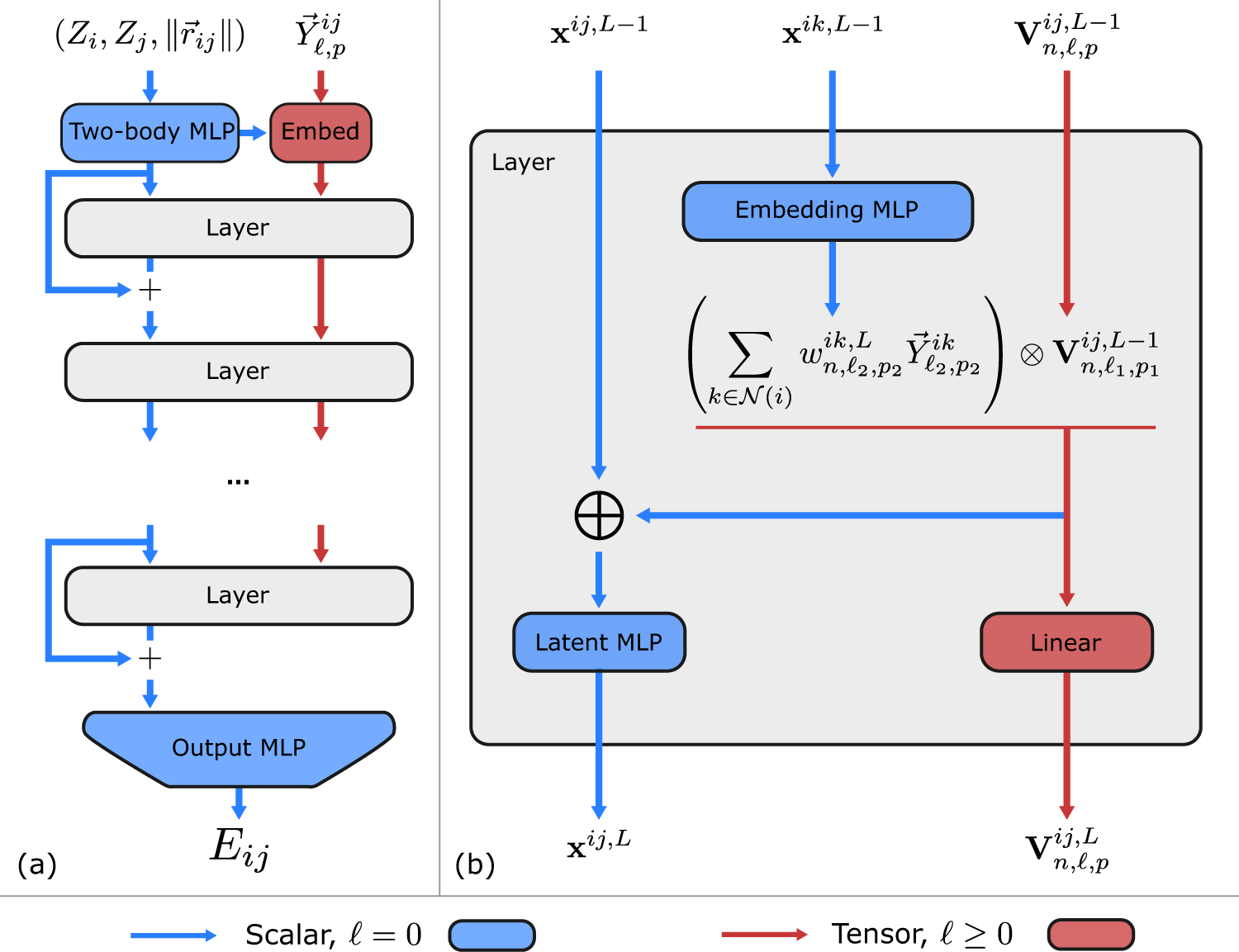

Learning local equivariant representations for large-scale

Dive into Deep Learning — Dive into Deep Learning 1.0.3 documentation

Grigory Sapunov on LinkedIn: Deep Learning with JAX

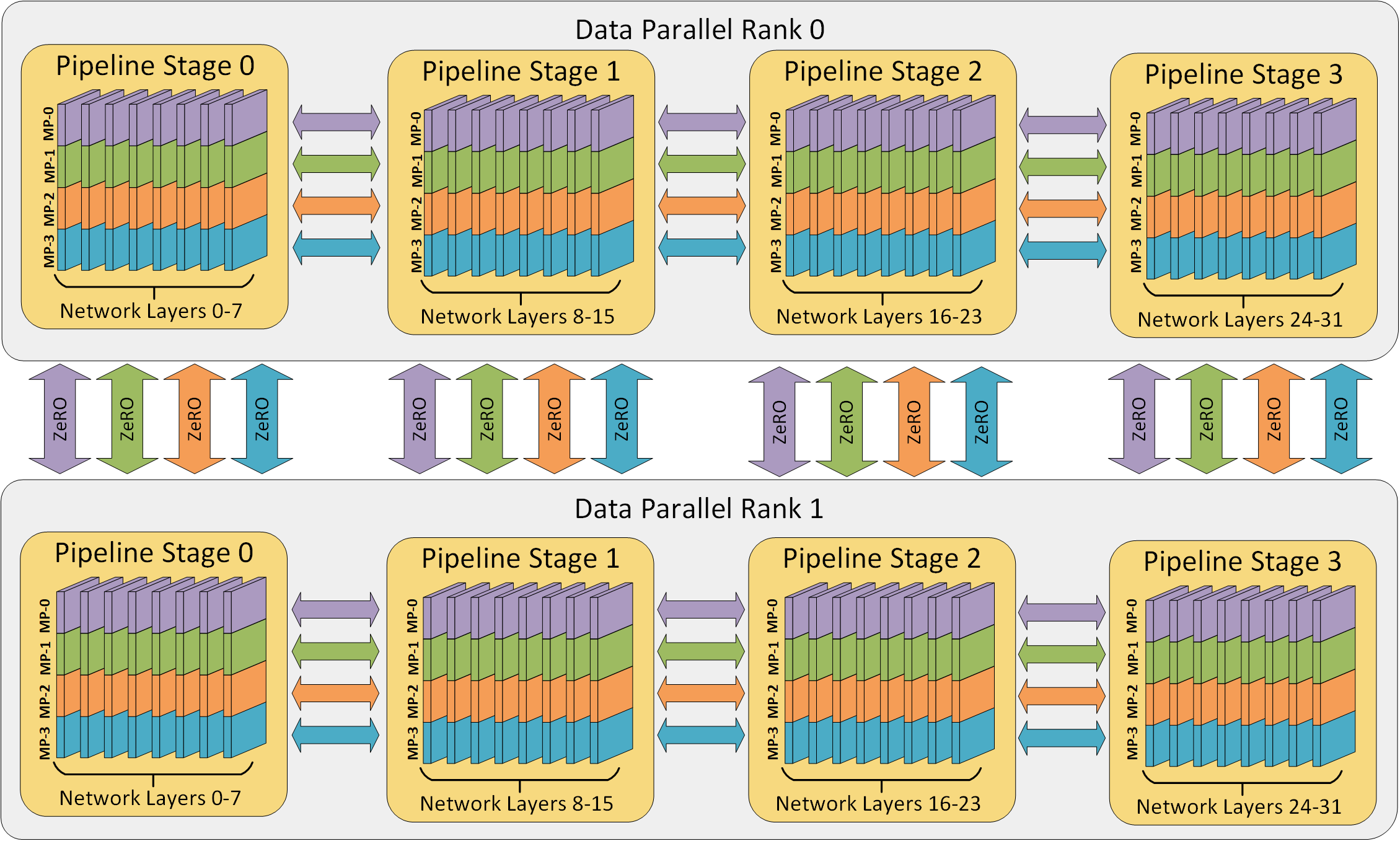

A Brief Overview of Parallelism Strategies in Deep Learning

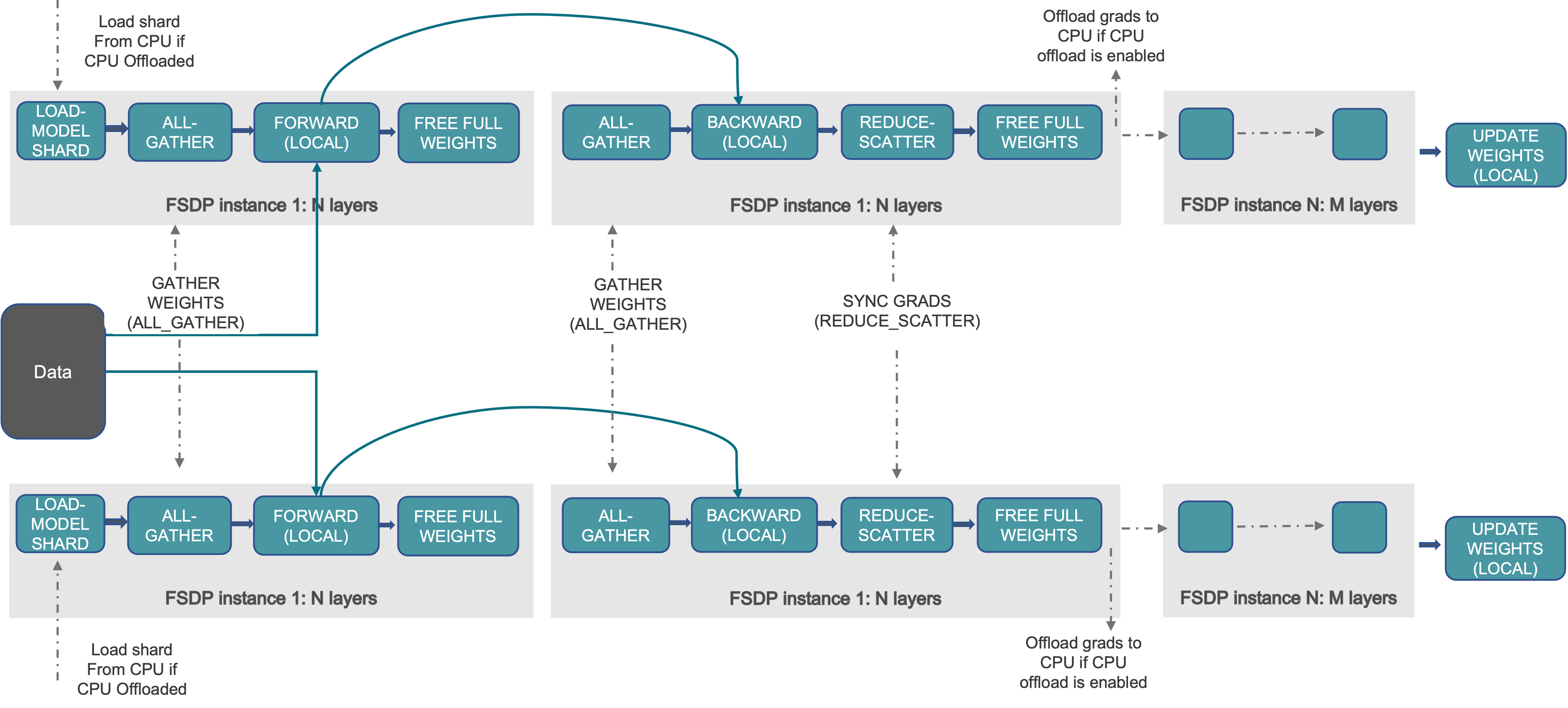

Introducing PyTorch Fully Sharded Data Parallel (FSDP) API

Compiler Technologies in Deep Learning Co-Design: A Survey

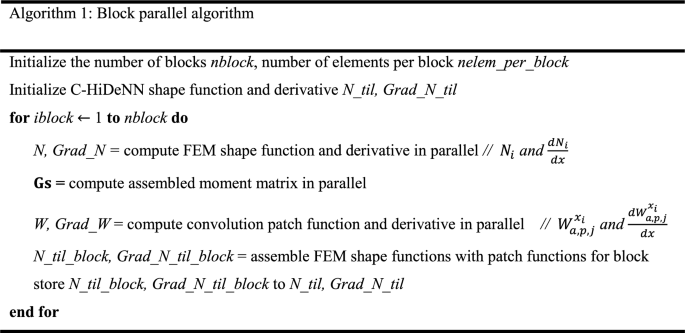

Convolution hierarchical deep-learning neural network (C-HiDeNN

GitHub - che-shr-cat/JAX-in-Action: Notebooks for the JAX in

Why You Should (or Shouldn't) be Using Google's JAX in 2023

Learn JAX in 2023: Part 2 - grad, jit, vmap, and pmap

Efficiently Scale LLM Training Across a Large GPU Cluster with
